Arguably, one starting point of game theory is the idea of a zero-sum game. Ordinary games such as chess have a winner and a loser. Games such as poker transfer money from the losers to the winner. Of course, game theory extends the concept of zero-sum games dramatically, to situations far more general than recreational games. Still, the idea of a zero-sum game separates the class of all games into two distinct camps: those that are essentially competitive and zero-sum, and those that have elements of cooperation, in which the total value of the game may grow or diminish. In this regard, constant-sum games are considered essentially equivalent to zero-sum games, except that one can imagine the two sides agreeing on some tribute, exchanged outside the rules of the game.
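To make the zero-sum/constant-sum distinction concrete, here is a minimal Python sketch for two-player games written in bimatrix form. The function names `constant_sum` and `to_zero_sum` and the example payoffs for the "split" game are hypothetical, introduced only for illustration. Treating the constant as a tribute handed back by the column player is one choice among several; because subtracting a constant from all of a player's payoffs does not change that player's best responses, the strategic content of the game is unchanged.

```python
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def constant_sum(row: Matrix, col: Matrix, tol: float = 1e-9) -> Optional[float]:
    """Return c if row[i][j] + col[i][j] == c for every outcome (i, j), else None."""
    totals = [a + b for r_line, c_line in zip(row, col) for a, b in zip(r_line, c_line)]
    if all(abs(t - totals[0]) <= tol for t in totals):
        return totals[0]
    return None


def to_zero_sum(row: Matrix, col: Matrix, c: float) -> Tuple[Matrix, Matrix]:
    """Treat the constant c as a tribute settled outside the game
    (here: subtracted from the column player's payoffs), leaving a zero-sum game."""
    return row, [[v - c for v in line] for line in col]


# Matching pennies: zero-sum.
pennies_row = [[1, -1], [-1, 1]]
pennies_col = [[-1, 1], [1, -1]]
# Splitting 10 units between two players: constant-sum (hypothetical payoffs).
split_row = [[6, 4], [3, 7]]
split_col = [[4, 6], [7, 3]]
# Prisoner's dilemma: neither zero-sum nor constant-sum.
pd_row = [[-1, -3], [0, -2]]
pd_col = [[-1, 0], [-3, -2]]

print(constant_sum(pennies_row, pennies_col))  # 0
print(constant_sum(split_row, split_col))      # 10
print(constant_sum(pd_row, pd_col))            # None
```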

I argue that decision process theory has much in common with the theory of games, so how does a zero-sum game manifest itself there? Or, more generally, how does a constant-sum game manifest itself? In some ways the question is difficult, because game theory has a very specific notion of utility, which has been modified in decision process theory. Several concepts might play the role of value, from the components of the payoff matrix to the preferences that underlie strategy choices. What is clear, however, is that a zero-sum game requires something to be conserved. There must be some attribute of the decision process that is unchanged: some attribute whose value has no impact on the strategic choices being made. The most appealing approach to me is to associate such a conserved quantity with a collective strategy that is inactive. Such a collective strategy represents a collective preference whose value plays no role in the strategic outcomes. One such collective strategy would be, in some frame of reference, the sum of the strategies of all the players. Interestingly enough, the corresponding sum in game theory is also a constant, since each player's mixed-strategy probabilities sum to one.
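The game-theoretic side of that last observation can be checked directly. In the sketch below (an illustration only; the helper names and the three hypothetical players are assumptions), each player's mixed strategy is a probability vector, so the sum of all players' strategy components always equals the number of players, whatever strategies they choose. Any change from one profile to another therefore has zero component along that collective direction, which is one simple sense in which it is inactive.

```python
import random
from typing import List


def random_mixed_strategy(n_actions: int, rng: random.Random) -> List[float]:
    """Draw a random probability vector over n_actions pure strategies."""
    weights = [rng.random() + 1e-12 for _ in range(n_actions)]  # avoid an all-zero draw
    total = sum(weights)
    return [w / total for w in weights]


def collective_sum(profile: List[List[float]]) -> float:
    """Sum of every strategy component of every player."""
    return sum(sum(strategy) for strategy in profile)


rng = random.Random(0)
actions_per_player = (2, 3, 4)  # three hypothetical players
for _ in range(3):
    profile = [random_mixed_strategy(k, rng) for k in actions_per_player]
    print(round(collective_sum(profile), 12))  # 3.0: the number of players, every time

# The difference between two profiles has no collective component.
a = [random_mixed_strategy(k, rng) for k in actions_per_player]
b = [random_mixed_strategy(k, rng) for k in actions_per_player]
delta = [[x - y for x, y in zip(sa, sb)] for sa, sb in zip(a, b)]
print(abs(collective_sum(delta)) < 1e-12)  # True
```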

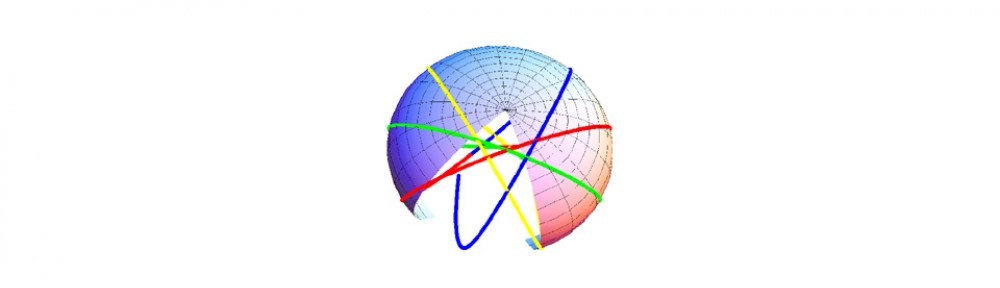

The concept of a collective strategy being inactive is not, however, quite the same as saying that the strategy is totally invisible. We have to examine in more detail what is meant by a strategy playing no strategic role. In game theory, this means that the fixed point, the equilibrium point, does not depend on the value of that strategy. In decision process theory, it means that there is a momentum associated with that collective strategy which is conserved. The conserved value is set by the initial conditions. If there is a great deal of initial inertia, for example, the decision system will behave quite differently than if there is very little. These differences are not seen in game theory: zero-sum games are characterized solely by their payoffs, so two games with the same payoffs should behave identically. I think this makes sense in decision process theory as well when the system is at a fixed point. However, the behavior around that fixed point should depend on the conserved quantities. Metaphorically, if the system circles the fixed point, you should perceive differences depending on the amount of angular momentum the system has, even though that angular momentum is conserved.
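The angular-momentum metaphor can be made concrete with a toy mechanical system; this is a physical analogy, not a decision process model. The sketch below integrates a particle in the isotropic potential `k * r**2 / 2` (an assumption chosen purely for illustration) with the velocity Verlet method. Every run has the same fixed point at the origin and the same force law, yet the radius range of the orbit depends on the conserved angular momentum `L = m * (x * vy - y * vx)`, which is set only by the initial velocity.

```python
import math
from typing import Tuple


def simulate(vy0: float, k: float = 1.0, m: float = 1.0,
             dt: float = 1e-3, steps: int = 20000) -> Tuple[float, float, float, float]:
    """Velocity Verlet for a particle in the potential k r^2 / 2, starting at (1, 0)
    with velocity (0, vy0). Returns (L_start, L_end, r_min, r_max)."""
    x, y, vx, vy = 1.0, 0.0, 0.0, vy0
    L_start = m * (x * vy - y * vx)
    r_min = r_max = math.hypot(x, y)
    for _ in range(steps):
        # Half kick: the force -k r is central (parallel to the position vector).
        vx -= 0.5 * dt * k * x / m
        vy -= 0.5 * dt * k * y / m
        # Drift along the current velocity.
        x += dt * vx
        y += dt * vy
        # Second half kick with the updated position.
        vx -= 0.5 * dt * k * x / m
        vy -= 0.5 * dt * k * y / m
        r = math.hypot(x, y)
        r_min, r_max = min(r_min, r), max(r_max, r)
    L_end = m * (x * vy - y * vx)
    return L_start, L_end, r_min, r_max


for vy0 in (0.2, 0.6, 1.0):
    L0, L1, r_min, r_max = simulate(vy0)
    print(f"L = {L0:.3f} (drift {abs(L1 - L0):.1e}), radius range [{r_min:.3f}, {r_max:.3f}]")
```

Because each kick is parallel to the position vector and each drift is parallel to the velocity, this integrator preserves the angular momentum up to round-off, so the printed drift should be tiny while the orbits differ visibly.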

How does one identify these conserved quantities? In game theory, one identifies, for example, games in which the payoffs sum to zero or to a constant. These systems are competitive: whatever one player wins, the other players lose in amounts that exactly compensate the win. Competition is thus one way of identifying situations in which there is a conserved collective strategy. I have argued in decision process theory that a conservation law occurs in societal situations in which there is an established code of conduct to which all players adhere. Let us call such a code of conduct an effective code of conduct. A competitive situation could, by a slight stretch, also be considered an effective code of conduct: all players agree that the rules of the game are that whatever one player gains, the remaining players must provide compensation. I suggest that the conserved quantity must depend in some way on the amount that is exchanged. The more value exchanged, the more risk, and hence the more interesting the dynamics required to hold such a process near some equilibrium value. Indeed, this may not be possible: the process may be unstable and fly apart while still maintaining these conservation rules.
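The competitive code of conduct can be mimicked with a toy exchange game, offered only as an illustration under simplifying assumptions: in each round a randomly chosen winner gains a fixed stake, and the remaining players pay it in equal shares. The names `play_rounds` and `stake` are hypothetical. The total holding is conserved by construction, while the spread of individual holdings grows with the stake, one crude reading of "the more value exchanged, the more risk."

```python
import random
import statistics
from typing import List


def play_rounds(n_players: int, stake: float, rounds: int, seed: int = 0) -> List[float]:
    """Each round one random winner gains `stake`; the other players pay it in equal
    shares, so the sum of holdings stays at zero. Requires n_players >= 2."""
    rng = random.Random(seed)
    holdings = [0.0] * n_players
    share = stake / (n_players - 1)
    for _ in range(rounds):
        winner = rng.randrange(n_players)
        for i in range(n_players):
            holdings[i] += stake if i == winner else -share
    return holdings


for stake in (1.0, 10.0):
    h = play_rounds(n_players=4, stake=stake, rounds=1000)
    print(f"stake {stake:>4}: total {sum(h):+.1e}, spread {statistics.pstdev(h):.1f}")
```

With the same seed the sequence of winners is identical, so the spread scales linearly with the stake. The sketch captures only the rules of exchange; it says nothing about whether a real process can be held near equilibrium.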

I don’t have a strong argument from game theory about what these conserved quantities should be. From decision process theory, however, I do have a strong argument: a unique conserved quantity is identified whenever one identifies an inactive strategy. In physical theories this quantity is the momentum, which in Newtonian theories is the product of the mass and the velocity along that direction. In other words, the inertial mass plays a role as well as the velocity. In more general geometries, such as those we consider for decision process theory, the momentum retains the same qualitative property. I argue that the momentum is the “value” that we should identify with the game-theoretic notion of a constant-sum game. I thus go from a direction that provides an effective code of conduct to the identification of a value whose sum is conserved, that is, constant independent of any and all dynamic interactions. In this way we have generalized what it means for a process to be zero-sum.
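The Newtonian version of this argument fits in a few lines. In the sketch below, two bodies of different mass interact only through a spring; the masses, spring constant, and initial conditions are arbitrary assumptions. The force on one body is equal and opposite to the force on the other, so no interaction can change the total momentum `m1 * v1 + m2 * v2`, even though each body's momentum swings back and forth. The integrator is semi-implicit (symplectic) Euler, chosen for brevity.

```python
from typing import List, Tuple


def simulate_pair(m1: float = 1.0, m2: float = 3.0, k: float = 2.0, rest: float = 1.0,
                  dt: float = 1e-3, steps: int = 5000) -> Tuple[float, List[Tuple[float, float]]]:
    """Two bodies on a line joined by a spring. Returns the initial total momentum
    and the history of individual momenta (p1, p2)."""
    x1, x2 = 0.0, 1.5   # positions (assumed)
    v1, v2 = 1.0, -0.2  # velocities (assumed)
    p_start = m1 * v1 + m2 * v2
    history = []
    for _ in range(steps):
        f = k * (x2 - x1 - rest)  # spring force on body 1; body 2 feels -f
        v1 += dt * f / m1
        v2 -= dt * f / m2
        x1 += dt * v1
        x2 += dt * v2
        history.append((m1 * v1, m2 * v2))
    return p_start, history


p_start, history = simulate_pair()
p1_values = [p1 for p1, _ in history]
drift = max(abs(p1 + p2 - p_start) for p1, p2 in history)
print(f"body 1 momentum ranges over [{min(p1_values):.2f}, {max(p1_values):.2f}]")
print(f"largest change in total momentum: {drift:.1e}")
```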

The zero-sum condition really states that the sum of the time components of the payoff, the “electric field components,” is zero. This is a direct consequence of the decision effort scale being inactive. Consider a different scenario in which all of the relative player preferences are inactive and only the player efforts are active. In this case, for each player, the time components of the electric fields would be equal. Moreover, we could require that there be no closed-loop current flows in the player subspace, which would imply no “magnetic fields” orthogonal to the player subspace. This is the requirement of no self-payoffs or factions. Such a model has much in common with the voting game in game theory.
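For readers less familiar with voting games, here is a minimal sketch of a weighted majority voting game and its Shapley-Shubik power index, computed by enumerating all orderings of the players and counting how often each player is pivotal. It only recalls the game-theoretic object the text compares against; the weights and quotas are hypothetical, and no mapping from decision-process fields to voting weights is implied. Note that the indices always sum to one, another instance of a fixed total shared among the players.

```python
from fractions import Fraction
from itertools import permutations
from typing import List


def shapley_shubik(weights: List[int], quota: int) -> List[Fraction]:
    """Fraction of player orderings in which each player casts the pivotal vote,
    i.e. first brings the running weight up to the quota."""
    n = len(weights)
    pivots = [0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        running = 0
        for player in order:
            running += weights[player]
            if running >= quota:
                pivots[player] += 1
                break
    return [Fraction(p, len(orders)) for p in pivots]


# Symmetric simple majority: every player has the same power, 1/3 each.
print(shapley_shubik([1, 1, 1], quota=2))
# Player 0 is pivotal in 4 of the 6 orderings: 2/3, 1/6, 1/6.
print(shapley_shubik([2, 1, 1], quota=3))
```

Enumerating permutations costs n! steps, so this direct approach is only practical for a handful of players.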