I worked on a large software development project whose most interesting feature was why it was delivered to the customer two years late. Both the customer and we, the vendor, knew from the outset what the project required. We agreed on the work that needed to be done and on the time it would take. About halfway through the project, the customer added new requirements, which we as the vendor agreed could be met while maintaining the original schedule, but with a known amount of increased effort.

We both looked at the project from a static perspective: we based our estimate of the initial effort on similar jobs we had done in the past, and we estimated the increased effort due to the additional requirements from the same historical data. This view treats the completion date as a normally distributed random variable with a small error. The shape of the distribution, including the standard deviation, is based on the historical data. Despite the best efforts of the development team, both the total effort needed to complete the project and the delivery time were vastly underestimated.

I believe that the lessons learned from this project are directly related to the need to view the software delivery process as a dynamic rather than a static process. We in fact brought in an outside system dynamics consultant on the project and learned the following.

- Our detailed understanding of the project was fundamentally correct: the number of engineers needed to produce a given amount of code didn’t change after the new requirements were added.

- Our understanding of the quality of the code produced by the engineers didn’t change based on an assessment of their skill set.

- It was well understood that new hires would be less skilled than those with training in the areas under development. Despite this understanding, standard practice was to not take into account such details when doing cost and schedule estimates. Normally, such differences would generate small increases in costs associated with training.

Though there were many other factors, these three lessons were already sufficient to explain why the costs and schedules were so far off. Let’s say that skilled developers would produce code that contained at most 10% errors. For argument’s sake, suppose that the product delivered to the customer could contain at most 1% errors. Suppose further that a test cycle to find and fix errors would take 6 months and that this was built into the original schedule, so that one test cycle after initial completion was expected to be sufficient to deliver a product of the requisite quality.

Now imagine adding new requirements, with the commensurate hiring of new personnel who have less experience on the product. Because of the new hires, the initial delivery would contain many more errors: say, as an example, 25%. So if we start with 100 units, 25 units have errors after the initial pass. After the planned 6 months, 25/4 units still have errors, assuming the same quality of testing and fixing, which is not sufficient to deliver a quality product. This means an additional 6 months of testing, which gets us down to 25/16 units. To get below the 1% defect requirement, we then need yet another 6 months of testing, to reach a defect level of 25/64 units. Thus we get two test cycles beyond the one planned, an additional year of development, because of the change in quality that came with the new hires.
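The arithmetic in this example can be replayed in a few lines of Python. This is only a sketch of the illustrative numbers above, not the consultant's model: the function name `cycles_to_target` and the parameter `residual_fraction` are hypothetical, and the assumption that each cycle leaves one quarter of the remaining errors is read off the 25 → 25/4 step.

```python
def cycles_to_target(initial_defect_pct, target_pct=1.0, residual_fraction=0.25):
    """Count the test-and-fix cycles needed to bring the defect level to
    target_pct or below, assuming (as in the example) that every cycle
    leaves the same fraction of the remaining defects."""
    cycles = 0
    remaining = initial_defect_pct
    while remaining > target_pct:
        remaining *= residual_fraction  # one 6-month test-and-fix cycle
        cycles += 1
    return cycles, remaining


CYCLE_MONTHS = 6    # length of one test cycle, from the example
PLANNED_CYCLES = 1  # the original schedule allowed for one cycle

cycles, remaining = cycles_to_target(25.0)  # 25% errors with the new hires
extra_months = (cycles - PLANNED_CYCLES) * CYCLE_MONTHS

print(f"cycles needed: {cycles}")
print(f"remaining defect level: {remaining} units per 100")
print(f"months beyond the planned schedule: {extra_months}")
```

Changing the starting defect level or `residual_fraction` shows how sensitive the delivery date is to what look like small changes in team quality, which is the point of the story.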

The actual quality on the project was worse and there were a few other factors, but the essential aspect of the story is unchanged: a dynamic look at the mechanisms demonstrates that small factors can lead to unexpected and huge effects. This example illustrates how decisions propagate and impact outcomes. Decision process theory provides a theoretical foundation for such effects, which is illustrated in the various models that we have detailed in our white papers. In this software example, we were misled when we assumed that certain effects, such as the quality of the engineers, were static. We assumed that the engineers would instantly become experts, ignoring what we knew equally well to be true: that a training period was needed to make that happen. Such a training period could in fact be a couple of years, well beyond the time we allowed for a development cycle.

There are examples in physics where we make similar assumptions, which don’t usually cause problems but can again lead to incorrect results. A mechanism that is closely related to decision process theory is that of gravity. In physics we assume that for most purposes gravity is static. Under extreme conditions, however, this is a bad assumption. If our sun were to explode, we would not feel the gravitational effect for roughly eight minutes because of the time it takes for the cause to make its effect felt. Such effects are labeled gravitational waves, despite our incorrect labeling of gravity as a static scalar field.
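The size of that delay is easy to check. The sketch below assumes that changes in gravity propagate at the speed of light, as general relativity predicts, and that the earth sits at its mean distance of one astronomical unit from the sun. The constant names are our own.

```python
# Both constants are exact by definition (SI and IAU respectively).
SPEED_OF_LIGHT_M_PER_S = 299_792_458
ASTRONOMICAL_UNIT_M = 149_597_870_700  # mean earth-sun distance

# Assumption: gravitational changes travel at the speed of light.
delay_seconds = ASTRONOMICAL_UNIT_M / SPEED_OF_LIGHT_M_PER_S
delay_minutes = delay_seconds / 60

print(f"delay: {delay_seconds:.0f} s, or about {delay_minutes:.1f} minutes")
```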

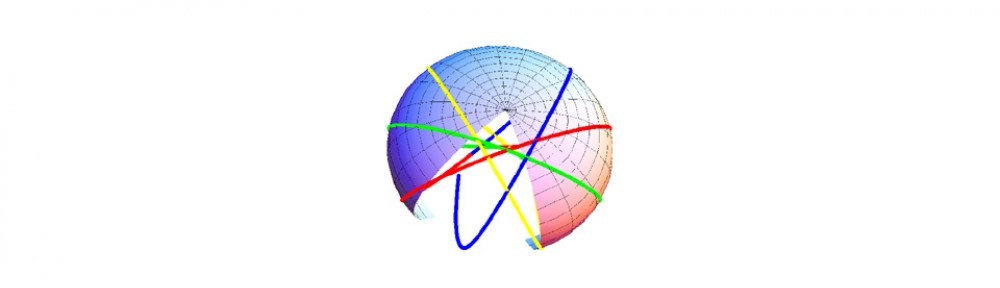

In decision process theory, it is also true that causes and their effects are separated by a finite time. There is always a propagation speed: effects are never instantaneous. In our model calculations we have focused initially on streamlines, which are paths along which the scalar fields are constant. For a picture, imagine the motion of air with smoke; the smoke provides visual evidence of the behavior of the streamline. One specific model might be of someone speaking, whose voice generates sound waves. We would see the streamlines display a global wave pattern, one that is not visible on any one streamline. Once sound waves are generated, we would see the streamlines undulate: the path of a velocity peak would propagate with a form we call a harmonic standing wave, analogous to shaking a jump rope whose other end is attached to a wall. This wave velocity is quite distinct from, and often much faster than, the velocity of the medium.
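To make the jump rope analogy concrete, here is a minimal sketch of a generic textbook standing wave on a string. It is not the decision process theory model itself, and the amplitude, wavelength and frequency are hypothetical values chosen only for illustration. It shows two things: a standing wave is the sum of two waves traveling in opposite directions, and the speed at which those waves travel can be much larger than the speed at which any piece of the rope actually moves.

```python
import math


def standing_wave(x, t, amplitude, k, omega):
    """Displacement of the rope at position x and time t."""
    return amplitude * math.sin(k * x) * math.cos(omega * t)


def sum_of_traveling_waves(x, t, amplitude, k, omega):
    """The same displacement, written as two counter-propagating waves."""
    half = amplitude / 2
    return half * math.sin(k * x - omega * t) + half * math.sin(k * x + omega * t)


# Hypothetical rope: 0.1 m swing, 6 m wavelength, shaken twice per second.
AMPLITUDE_M = 0.1
K = 2 * math.pi / 6.0      # wavenumber in rad/m
OMEGA = 2 * math.pi * 2.0  # angular frequency in rad/s

wave_speed = OMEGA / K                  # speed of the traveling components
max_rope_speed = AMPLITUDE_M * OMEGA    # fastest any point on the rope moves

print(f"wave speed: {wave_speed:.2f} m/s")
print(f"maximum speed of the rope itself: {max_rope_speed:.2f} m/s")
print(f"ratio: {wave_speed / max_rope_speed:.1f}")
```

The ratio of the two speeds is 1/(amplitude × wavenumber), so the smaller the swing relative to the wavelength, the more the wave outruns the medium.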

Here is a model calculation using Mathematica of what a harmonic standing wave looks like in decision process theory for an attack-defense model:

[WolframCDF source="http://decisionprocesstheory.com/wp-content/uploads/2012/08/network-waves-pressure-plot.cdf" width="328" height="317" altimage="http://decisionprocesstheory.com/wp-content/uploads/2012/08/network-waves-pressure-plot.cdf"]